23 Chains in LangChain

import warnings
warnings.filterwarnings('ignore')

23.1 Outline

- LLMChain

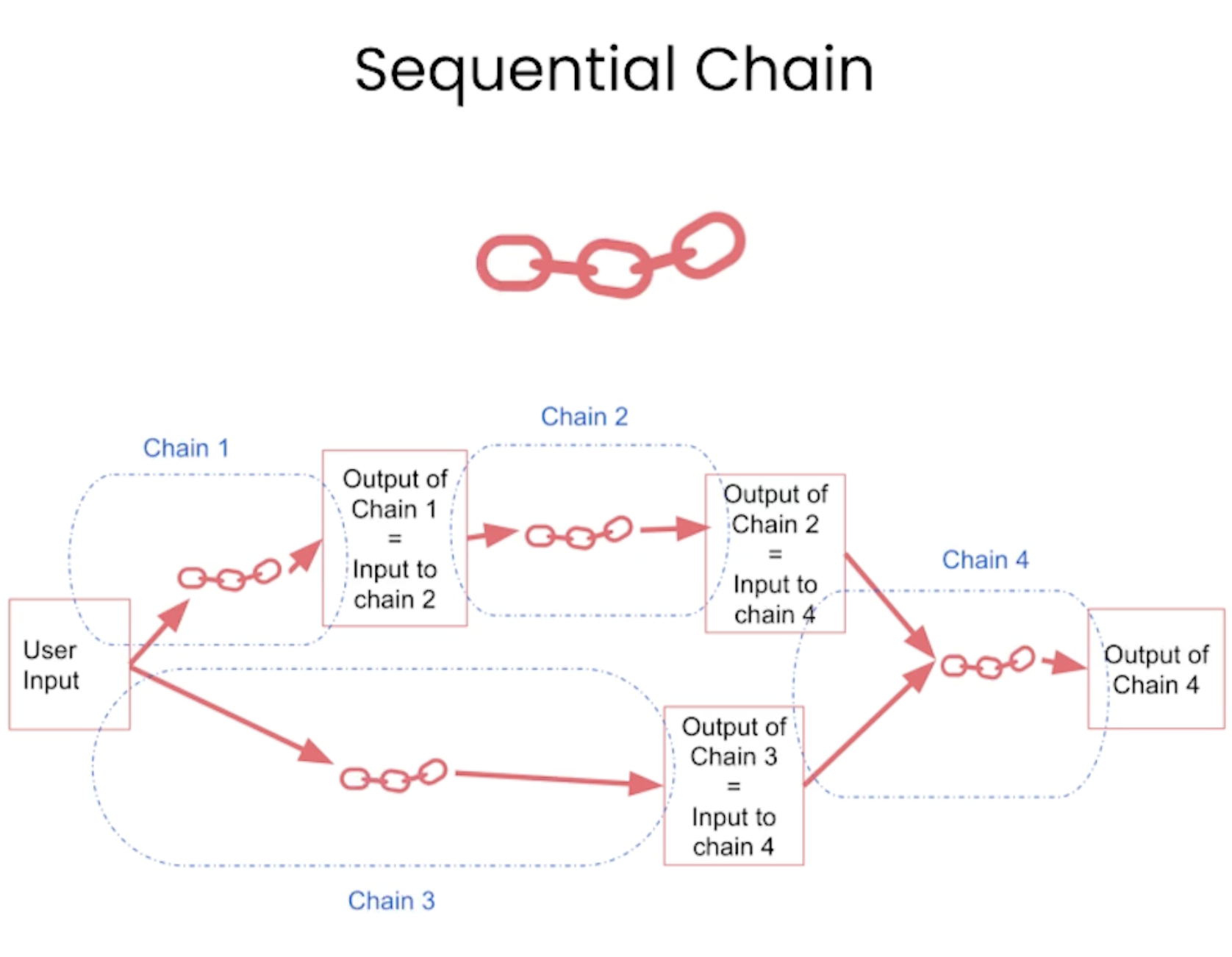

- Sequential Chains
  - SimpleSequentialChain
  - SequentialChain

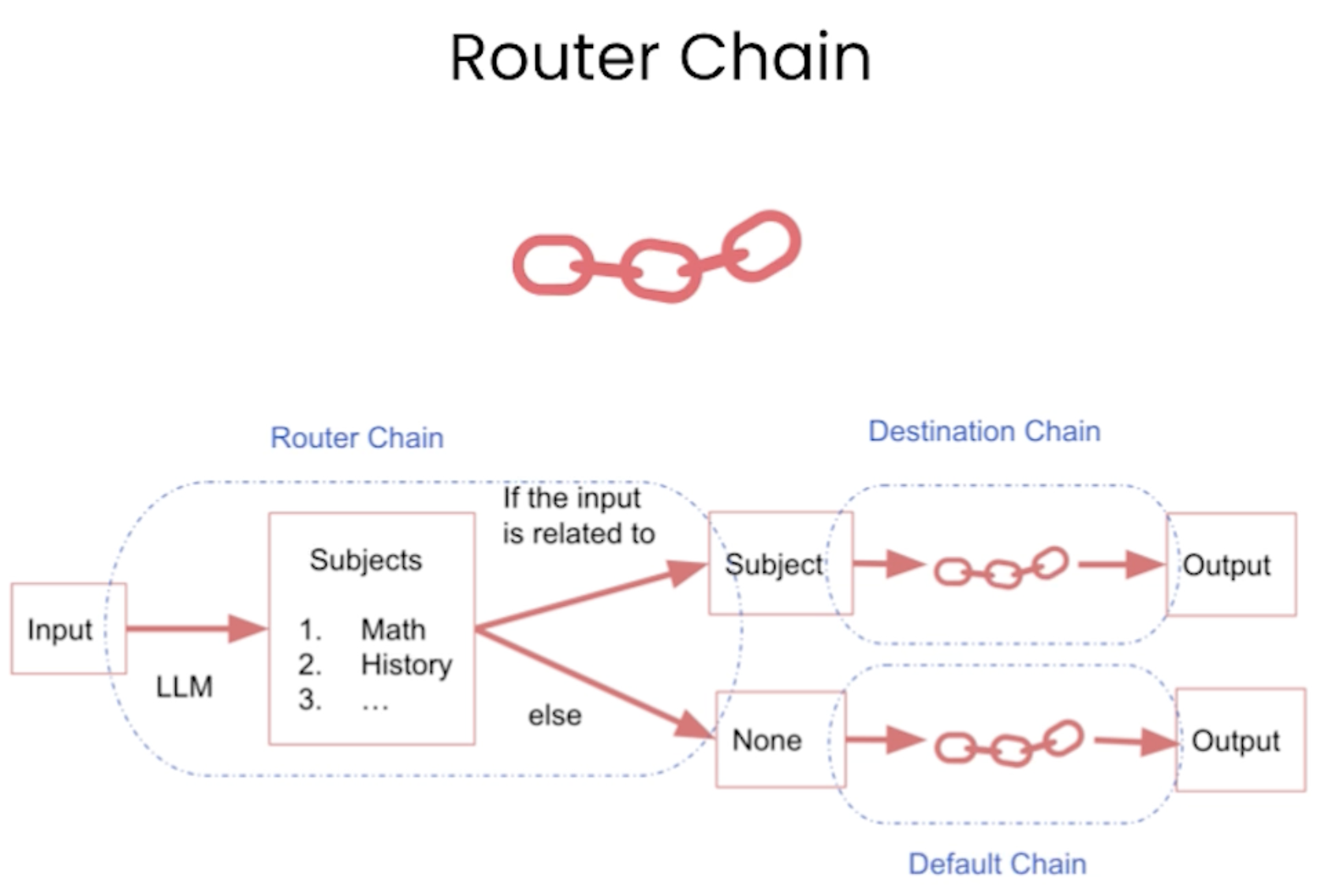

- Router Chain

import os
from dotenv import load_dotenv, find_dotenv
_ = load_dotenv(find_dotenv())  # read local .env file

Note: LLMs do not always produce the same results. When executing the code in your notebook, you may get slightly different answers than those in the video.

# account for deprecation of LLM model
import datetime

# Get the current date
current_date = datetime.datetime.now().date()

# Define the date after which the model should be set to "gpt-3.5-turbo"
target_date = datetime.date(2024, 6, 12)

# Set the model variable based on the current date
if current_date > target_date:
    llm_model = "gpt-3.5-turbo"
else:
    llm_model = "gpt-3.5-turbo-0301"

product_reviews = {
    "Product": [
        "Queen Size Sheet Set",
        "Waterproof Phone Pouch",
        "Luxury Air Mattress",
        "Pillows Insert",
        "Milk Frother Handheld"
    ],
    "Review": [
        "I ordered a king size set. My only criticism was that the fitted sheet was a little tight, but otherwise, it's a good quality set.",
        "I loved the waterproof sac, although the opening mechanism could be improved. It keeps my phone dry during water activities.",
        "This mattress had a small hole in the top of it upon arrival. Customer service resolved the issue quickly, but it’s something to be aware of.",
        "This is the best throw pillow fillers on Amazon! They are soft, well-filled, and hold their shape nicely even after months of use.",
        "I loved this product. But they only seem to last a few months before the motor wears out. It’s great for frothing milk, but durability is lacking."
    ]
}

import pandas as pd

df = pd.DataFrame(product_reviews)
df.head()

|   | Product | Review |
|---|---|---|
| 0 | Queen Size Sheet Set | I ordered a king size set. My only criticism w... |
| 1 | Waterproof Phone Pouch | I loved the waterproof sac, although the openi... |
| 2 | Luxury Air Mattress | This mattress had a small hole in the top of i... |
| 3 | Pillows Insert | This is the best throw pillow fillers on Amazo... |
| 4 | Milk Frother Handheld | I loved this product. But they only seem to la... |

23.2 LLMChain

from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate
from langchain.chains import LLMChain, SequentialChain

# use the model selected above
llm = ChatOpenAI(temperature=0.9, model=llm_model)

prompt = ChatPromptTemplate.from_template(
    "What is the best name to describe "
    "a company that makes {product}?"
)

chain = LLMChain(llm=llm, prompt=prompt)
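Under the hood, an LLMChain does two things. It fills the prompt template with the input variables, then sends the resulting prompt to the model. The sketch below imitates that flow in plain Python so it can run offline. `run_llm_chain` and `fake_llm` are hypothetical names, and `fake_llm` just echoes its prompt; ChatOpenAI would instead call the OpenAI API at this point.

```python
from typing import Callable, Dict


def run_llm_chain(template: str, llm: Callable[[str], str], inputs: Dict[str, str]) -> str:
    """Fill the prompt template with the inputs, then send the filled prompt to the model."""
    prompt_text = template.format(**inputs)  # substitutes placeholders such as {product}
    return llm(prompt_text)


def fake_llm(prompt_text: str) -> str:
    """Hypothetical offline stand-in for ChatOpenAI: it simply echoes the prompt it receives."""
    return f"[model reply to] {prompt_text}"


template = "What is the best name to describe a company that makes {product}?"
reply = run_llm_chain(template, fake_llm, {"product": "Queen Size Sheet Set"})
print(reply)
# [model reply to] What is the best name to describe a company that makes Queen Size Sheet Set?
```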

chain

LLMChain(prompt=ChatPromptTemplate(input_variables=['product'], messages=[HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['product'], template='What is the best name to describe a company that makes {product}?'))]), llm=ChatOpenAI(client=<openai.resources.chat.completions.Completions object at 0x31031b550>, async_client=<openai.resources.chat.completions.AsyncCompletions object at 0x310388af0>, temperature=0.9, openai_api_key='sk-proj-***', openai_proxy=''))

product = "Queen Size Sheet Set"
chain.run(product)

'"Royal Dream Bedding"'

product = "LLM chatbot app using Llama"
chain.run(product)

'"LlamaChat"'

23.3 SimpleSequentialChain
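A SimpleSequentialChain fits pipelines in which every step takes exactly one input and returns exactly one output, with each step's output becoming the next step's input. Here is a minimal plain-Python sketch of that hand-off. The helper `run_simple_sequential` and the two stand-in functions (in place of the LLM-backed chain_one and chain_two) are hypothetical.

```python
from typing import Callable, List

Step = Callable[[str], str]


def run_simple_sequential(steps: List[Step], text: str, verbose: bool = False) -> str:
    """Pipe one string through each step in turn: each output is the next step's only input."""
    for step in steps:
        text = step(text)
        if verbose:
            print(text)  # roughly what verbose=True shows between steps
    return text


# Hypothetical stand-ins for chain_one (company name) and chain_two (description).
def name_company(product: str) -> str:
    return product.split()[-1] + "Talk"


def describe_company(company_name: str) -> str:
    return f"{company_name} builds chat apps."


result = run_simple_sequential(
    [name_company, describe_company], "LLM chatbot app using Llama", verbose=True
)
```

Because each step sees only a single string, there is no way to route, say, the original product name to the second step. SequentialChain, introduced next, addresses this with named keys.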

from langchain.chains import SimpleSequentialChain

llm = ChatOpenAI(temperature=0.9, model=llm_model)

# prompt template 1
first_prompt = ChatPromptTemplate.from_template(
    "What is the best name to describe "
    "a company that makes {product}?"
)

# Chain 1
chain_one = LLMChain(llm=llm, prompt=first_prompt)

# prompt template 2
second_prompt = ChatPromptTemplate.from_template(
    "Write a 20-word description for the following "
    "company: {company_name}"
)

# chain 2
chain_two = LLMChain(llm=llm, prompt=second_prompt)

overall_simple_chain = SimpleSequentialChain(
    chains=[chain_one, chain_two],
    verbose=True,
)

overall_simple_chain.run(product)

> Entering new SimpleSequentialChain chain...
LlamaTalk
LlamaTalk is a cutting-edge communication technology company specializing in unique voice and text messaging solutions for businesses and individuals.

> Finished chain.

'LlamaTalk is a cutting-edge communication technology company specializing in unique voice and text messaging solutions for businesses and individuals.'

23.4 SequentialChain
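SequentialChain generalizes this to steps with several named inputs and outputs. Each chain's output_key becomes a named value, and any later chain can read any key produced earlier. The following is an illustrative pure-Python sketch, not LangChain's implementation. `run_sequential` and the lambda stand-ins for the four LLM chains built below are hypothetical. Unlike the real chain, which returns the inputs plus the requested output_variables, this sketch returns every key.

```python
from typing import Callable, Dict, List, Tuple

# Each step: (input keys it reads, output key it writes, function producing the value)
Step = Tuple[List[str], str, Callable[..., str]]


def run_sequential(steps: List[Step], inputs: Dict[str, str]) -> Dict[str, str]:
    """Run steps in order, storing each output under its own key so later steps can read it."""
    memory = dict(inputs)
    for input_keys, output_key, fn in steps:
        memory[output_key] = fn(*(memory[k] for k in input_keys))
    return memory


steps: List[Step] = [
    (["Review"], "English_Review", lambda review: review),  # stand-in "translation"
    (["English_Review"], "summary", lambda text: text.split(".")[0] + "."),
    (["Review"], "language", lambda review: "English"),  # stand-in language detection
    (["summary", "language"], "followup_message",
     lambda summary, language: f"[{language}] Thanks for writing: {summary}"),
]

result = run_sequential(steps, {"Review": "Great frother. Motor died after months."})
print(result["followup_message"])  # [English] Thanks for writing: Great frother.
```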

from langchain.chains import SequentialChain

llm = ChatOpenAI(temperature=0.9, model=llm_model)

# prompt template 1: translate to English
first_prompt = ChatPromptTemplate.from_template(
    "Translate the following review to english:"
    "\n\n{Review}"
)

# chain 1: input = Review, output = English_Review
chain_one = LLMChain(llm=llm, prompt=first_prompt,
                     output_key="English_Review"
                     )
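ChatPromptTemplate.from_template works out a prompt's input variables from the {placeholders} in the template string, which is why chain_one.prompt reports input_variables=['Review']. Python's standard string.Formatter can perform a similar extraction. The helper below, `infer_input_variables`, is a hypothetical illustration, not LangChain's own code. Its sorting mirrors the alphabetical order in the printed prompts, such as ['language', 'summary'] for the fourth prompt.

```python
from string import Formatter
from typing import List


def infer_input_variables(template: str) -> List[str]:
    """Collect the {field} names in an f-string-style template, de-duplicated and sorted."""
    # Formatter().parse yields (literal_text, field_name, format_spec, conversion);
    # field_name is None for pure literal chunks.
    names = {field for _, field, _, _ in Formatter().parse(template) if field}
    return sorted(names)


print(infer_input_variables("Translate the following review to english:\n\n{Review}"))
# ['Review']
print(infer_input_variables(
    "Write a follow up response to the following summary in the specified language:"
    "\n\nSummary: {summary}\n\nLanguage: {language}"
))
# ['language', 'summary']
```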

chain_one.prompt

ChatPromptTemplate(input_variables=['Review'], messages=[HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['Review'], template='Translate the following review to english:\n\n{Review}'))])

second_prompt = ChatPromptTemplate.from_template(
    "Can you summarize the following review in 1 sentence:"
    "\n\n{English_Review}"
)

# chain 2: input = English_Review, output = summary
chain_two = LLMChain(llm=llm, prompt=second_prompt,
                     output_key="summary"
                     )

chain_two.prompt

ChatPromptTemplate(input_variables=['English_Review'], messages=[HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['English_Review'], template='Can you summarize the following review in 1 sentence:\n\n{English_Review}'))])

# prompt template 3: identify the review's language

third_prompt = ChatPromptTemplate.from_template(
    "What language is the following review:\n\n{Review}"
)

# chain 3: input = Review, output = language
chain_three = LLMChain(llm=llm, prompt=third_prompt,
                       output_key="language"
                       )

# prompt template 4: follow-up message
fourth_prompt = ChatPromptTemplate.from_template(
    "Write a follow up response to the following "
    "summary in the specified language:"
    "\n\nSummary: {summary}\n\nLanguage: {language}"
)

# chain 4: input = summary, language; output = followup_message
chain_four = LLMChain(llm=llm, prompt=fourth_prompt,
                      output_key="followup_message"
                      )

# overall_chain: input = Review
# and output = English_Review, summary, followup_message
overall_chain = SequentialChain(
    chains=[chain_one, chain_two, chain_three, chain_four],
    input_variables=["Review"],
    output_variables=["English_Review", "summary", "followup_message"],
    verbose=True
)
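Because each step reads its inputs by name, the order of the chains matters: a chain can only consume a key that the caller or an earlier chain has already produced. As far as we know, SequentialChain performs this check when it is constructed and raises an error listing the missing keys (the exact message depends on the LangChain version). Below is a hypothetical stand-alone version of that check, `check_chain_keys`, fed with the input and output keys of the four chains above.

```python
from typing import Dict, List, Set


def check_chain_keys(chain_io: List[Dict[str, List[str]]], input_variables: List[str]) -> Set[str]:
    """Raise if a step reads a key that neither the caller nor an earlier step provides."""
    known: Set[str] = set(input_variables)
    for i, io in enumerate(chain_io):
        missing = set(io["inputs"]) - known
        if missing:
            raise ValueError(f"Step {i} is missing required input keys: {sorted(missing)}")
        known.update(io["outputs"])
    return known


chain_io = [
    {"inputs": ["Review"], "outputs": ["English_Review"]},
    {"inputs": ["English_Review"], "outputs": ["summary"]},
    {"inputs": ["Review"], "outputs": ["language"]},
    {"inputs": ["summary", "language"], "outputs": ["followup_message"]},
]
print(sorted(check_chain_keys(chain_io, ["Review"])))

# Swapping the last two steps breaks the pipeline: "language" is not available yet.
try:
    check_chain_keys([chain_io[0], chain_io[1], chain_io[3], chain_io[2]], ["Review"])
except ValueError as err:
    print(err)  # Step 2 is missing required input keys: ['language']
```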

[chain.prompt for chain in overall_chain.chains]

[ChatPromptTemplate(input_variables=['Review'], messages=[HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['Review'], template='Translate the following review to english:\n\n{Review}'))]),
 ChatPromptTemplate(input_variables=['English_Review'], messages=[HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['English_Review'], template='Can you summarize the following review in 1 sentence:\n\n{English_Review}'))]),
 ChatPromptTemplate(input_variables=['Review'], messages=[HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['Review'], template='What language is the following review:\n\n{Review}'))]),
 ChatPromptTemplate(input_variables=['language', 'summary'], messages=[HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['language', 'summary'], template='Write a follow up response to the following summary in the specified language:\n\nSummary: {summary}\n\nLanguage: {language}'))])]

df.Review[4]

'I loved this product. But they only seem to last a few months before the motor wears out. It’s great for frothing milk, but durability is lacking.'

overall_chain(df.Review[4])

> Entering new SequentialChain chain...

> Finished chain.

{'Review': 'I loved this product. But they only seem to last a few months before the motor wears out. It’s great for frothing milk, but durability is lacking.',
 'English_Review': 'Me encantó este producto. Pero parece que solo duran unos meses antes de que se desgaste el motor. Es excelente para espumar leche, pero la durabilidad deja mucho que desear.',
 'summary': 'El producto es excelente para espumar leche, pero su durabilidad es limitada ya que el motor se desgasta después de algunos meses de uso.',
 'followup_message': 'Thank you for your feedback on the product. We are glad to hear that it works well for foaming milk. We apologize for the durability issue you experienced with the motor wearing out after a few months of use. We will take this into consideration and work on improving the quality and longevity of the product in the future. Thank you for bringing this to our attention.'}

23.4.1 Apply Chain to DF

df['Output'] = df.apply(lambda row: overall_chain(row.Review), axis=1)

> Entering new SequentialChain chain...

> Finished chain.

> Entering new SequentialChain chain...

> Finished chain.

> Entering new SequentialChain chain...

> Finished chain.

> Entering new SequentialChain chain...

> Finished chain.

> Entering new SequentialChain chain...

> Finished chain.

df

|   | Product | Review | Output |
|---|---|---|---|
| 0 | Queen Size Sheet Set | I ordered a king size set. My only criticism w... | {'Review': 'I ordered a king size set. My only... |
| 1 | Waterproof Phone Pouch | I loved the waterproof sac, although the openi... | {'Review': 'I loved the waterproof sac, althou... |
| 2 | Luxury Air Mattress | This mattress had a small hole in the top of i... | {'Review': 'This mattress had a small hole in ... |
| 3 | Pillows Insert | This is the best throw pillow fillers on Amazo... | {'Review': 'This is the best throw pillow fill... |
| 4 | Milk Frother Handheld | I loved this product. But they only seem to la... | {'Review': 'I loved this product. But they onl... |
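Each Output cell now holds the whole dictionary returned by overall_chain. To work with the generated fields as ordinary columns, the list of dictionaries can be expanded with pandas. The sketch below substitutes a small hypothetical DataFrame with placeholder values for the real LLM output, so it runs without an API key.

```python
import pandas as pd

# Hypothetical stand-in for the DataFrame above: each "Output" cell holds the
# dict returned by overall_chain (placeholder values instead of LLM text).
df = pd.DataFrame({
    "Product": ["Luxury Air Mattress", "Milk Frother Handheld"],
    "Output": [
        {"Review": "review 1", "English_Review": "review 1",
         "summary": "summary 1", "followup_message": "reply 1"},
        {"Review": "review 2", "English_Review": "review 2",
         "summary": "summary 2", "followup_message": "reply 2"},
    ],
})

# Expand the dicts into columns, aligned on the original row index,
# then keep only the generated fields next to the product name.
expanded = pd.DataFrame(df["Output"].tolist(), index=df.index)
result = df.drop(columns="Output").join(expanded[["summary", "followup_message"]])
print(result)
```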

23.5 Router Chain
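A router chain works in two stages. First, a router LLM reads the question and names a destination. Then the input is forwarded to the chain registered under that name, or to a default chain when the router answers "DEFAULT". The plain-Python sketch below covers only the dispatch stage and uses hypothetical stand-ins for the LLM chains. Its branches are modelled on the MultiRouteChain._call source visible in the traceback at the end of this section, including the ValueError for names that are not registered.

```python
from typing import Callable, Dict, Optional

Chain = Callable[[str], str]


def route(destination: Optional[str], next_input: str,
          destination_chains: Dict[str, Chain], default_chain: Chain) -> str:
    """Send the input to the named destination chain, or to the default chain for None."""
    if destination is None:  # the router answered "DEFAULT"
        return default_chain(next_input)
    if destination in destination_chains:
        return destination_chains[destination](next_input)
    raise ValueError(f"Received invalid destination chain name '{destination}'")


# Hypothetical stand-ins for the LLMChains built below.
destination_chains: Dict[str, Chain] = {
    "physics": lambda q: f"physics answer: {q}",
    "math": lambda q: f"math answer: {q}",
}


def default_answer(q: str) -> str:
    return f"general answer: {q}"


print(route("math", "what is 2 + 2", destination_chains, default_answer))
# math answer: what is 2 + 2
```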

physics_template = """You are a very smart physics professor. \
You are great at answering questions about physics in a concise \
and easy to understand manner. \
When you don't know the answer to a question you admit \
that you don't know.

Here is a question:
{input}"""

math_template = """You are a very good mathematician. \
You are great at answering math questions. \
You are so good because you are able to break down \
hard problems into their component parts, \
answer the component parts, and then put them together \
to answer the broader question.

Here is a question:
{input}"""

history_template = """You are a very good historian. \
You have an excellent knowledge of and understanding of people, \
events and contexts from a range of historical periods. \
You have the ability to think, reflect, debate, discuss and \
evaluate the past. You have a respect for historical evidence \
and the ability to make use of it to support your explanations \
and judgements.

Here is a question:
{input}"""

computerscience_template = """You are a successful computer scientist. \
You have a passion for creativity, collaboration, \
forward-thinking, confidence, strong problem-solving capabilities, \
understanding of theories and algorithms, and excellent communication \
skills. You are great at answering coding questions. \
You are so good because you know how to solve a problem by \
describing the solution in imperative steps \
that a machine can easily interpret and you know how to \
choose a solution that has a good balance between \
time complexity and space complexity.

Here is a question:
{input}"""

prompt_infos = [

    {
        "name": "physics",
        "description": "Good for answering questions about physics",
        "prompt_template": physics_template
    },
    {
        "name": "math",
        "description": "Good for answering math questions",
        "prompt_template": math_template
    },
    {
        "name": "History",
        "description": "Good for answering history questions",
        "prompt_template": history_template
    },
    {
        "name": "computer science",
        "description": "Good for answering computer science questions",
        "prompt_template": computerscience_template
    }
]

from langchain.chains.router import MultiPromptChain
from langchain.chains.router.llm_router import LLMRouterChain, RouterOutputParser
from langchain.prompts import PromptTemplate

llm = ChatOpenAI(temperature=0, model=llm_model)

# one LLMChain per subject, keyed by the name the router will return
destination_chains = {}
for p_info in prompt_infos:
    name = p_info["name"]
    prompt_template = p_info["prompt_template"]
    prompt = ChatPromptTemplate.from_template(template=prompt_template)
    chain = LLMChain(llm=llm, prompt=prompt)
    destination_chains[name] = chain

destinations = [f"{p['name']}: {p['description']}" for p in prompt_infos]
destinations_str = "\n".join(destinations)
print(destinations)

['physics: Good for answering questions about physics', 'math: Good for answering math questions', 'History: Good for answering history questions', 'computer science: Good for answering computer science questions']

default_prompt = ChatPromptTemplate.from_template("{input}")
default_chain = LLMChain(llm=llm, prompt=default_prompt)

MULTI_PROMPT_ROUTER_TEMPLATE = """Given a raw text input to a \

language model select the model prompt best suited for the input. \
You will be given the names of the available prompts and a \
description of what the prompt is best suited for. \
You may also revise the original input if you think that revising \
it will ultimately lead to a better response from the language model.

<< FORMATTING >>
Return a markdown code snippet with a JSON object formatted to look like:
```json
{{{{
    "destination": string \\ name of the prompt to use or "DEFAULT"
    "next_inputs": string \\ a potentially modified version of the original input
}}}}
```

REMEMBER: "destination" MUST be one of the candidate prompt \
names specified below OR it can be "DEFAULT" if the input is not \
well suited for any of the candidate prompts.
REMEMBER: "next_inputs" can just be the original input \
if you don't think any modifications are needed.

<< CANDIDATE PROMPTS >>
{destinations}

<< INPUT >>
{{input}}

<< OUTPUT (remember to include the ```json)>>"""

router_template = MULTI_PROMPT_ROUTER_TEMPLATE.format(
    destinations=destinations_str
)
print(router_template)
print("\n", "-"*100, "\n")

router_prompt = PromptTemplate(
    template=router_template,
    input_variables=["input"],
    output_parser=RouterOutputParser(),
)
print(router_prompt)
print("\n", "-"*100, "\n")

router_chain = LLMRouterChain.from_llm(llm, router_prompt)
print(router_chain)

Given a raw text input to a language model select the model prompt best suited for the input. You will be given the names of the available prompts and a description of what the prompt is best suited for. You may also revise the original input if you think that revising it will ultimately lead to a better response from the language model.

<< FORMATTING >>
Return a markdown code snippet with a JSON object formatted to look like:
```json
{{
    "destination": string \ name of the prompt to use or "DEFAULT"
    "next_inputs": string \ a potentially modified version of the original input
}}
```

REMEMBER: "destination" MUST be one of the candidate prompt names specified below OR it can be "DEFAULT" if the input is not well suited for any of the candidate prompts.
REMEMBER: "next_inputs" can just be the original input if you don't think any modifications are needed.

<< CANDIDATE PROMPTS >>
physics: Good for answering questions about physics
math: Good for answering math questions
History: Good for answering history questions
computer science: Good for answering computer science questions

<< INPUT >>
{input}

<< OUTPUT (remember to include the ```json)>>

----------------------------------------------------------------------------------------------------

input_variables=['input'] output_parser=RouterOutputParser() template='Given a raw text input to a language model select the model prompt best suited for the input. You will be given the names of the available prompts and a description of what the prompt is best suited for. You may also revise the original input if you think that revising it will ultimately lead to a better response from the language model.\n\n<< FORMATTING >>\nReturn a markdown code snippet with a JSON object formatted to look like:\n```json\n{{\n    "destination": string \\ name of the prompt to use or "DEFAULT"\n    "next_inputs": string \\ a potentially modified version of the original input\n}}\n```\n\nREMEMBER: "destination" MUST be one of the candidate prompt names specified below OR it can be "DEFAULT" if the input is not well suited for any of the candidate prompts.\nREMEMBER: "next_inputs" can just be the original input if you don\'t think any modifications are needed.\n\n<< CANDIDATE PROMPTS >>\nphysics: Good for answering questions about physics\nmath: Good for answering math questions\nHistory: Good for answering history questions\ncomputer science: Good for answering computer science questions\n\n<< INPUT >>\n{input}\n\n<< OUTPUT (remember to include the ```json)>>'

----------------------------------------------------------------------------------------------------

llm_chain=LLMChain(prompt=PromptTemplate(input_variables=['input'], output_parser=RouterOutputParser(), template='Given a raw text input to a language model select the model prompt best suited for the input. You will be given the names of the available prompts and a description of what the prompt is best suited for. You may also revise the original input if you think that revising it will ultimately lead to a better response from the language model.\n\n<< FORMATTING >>\nReturn a markdown code snippet with a JSON object formatted to look like:\n```json\n{{\n    "destination": string \\ name of the prompt to use or "DEFAULT"\n    "next_inputs": string \\ a potentially modified version of the original input\n}}\n```\n\nREMEMBER: "destination" MUST be one of the candidate prompt names specified below OR it can be "DEFAULT" if the input is not well suited for any of the candidate prompts.\nREMEMBER: "next_inputs" can just be the original input if you don\'t think any modifications are needed.\n\n<< CANDIDATE PROMPTS >>\nphysics: Good for answering questions about physics\nmath: Good for answering math questions\nHistory: Good for answering history questions\ncomputer science: Good for answering computer science questions\n\n<< INPUT >>\n{input}\n\n<< OUTPUT (remember to include the ```json)>>'), llm=ChatOpenAI(client=<openai.resources.chat.completions.Completions object at 0x17fe807c0>, async_client=<openai.resources.chat.completions.AsyncCompletions object at 0x17fe820e0>, temperature=0.0, openai_api_key='sk-proj-***', openai_proxy=''))

chain = MultiPromptChain(router_chain=router_chain,

                         destination_chains=destination_chains,
                         default_chain=default_chain, verbose=True
                         )

chain.run("What is black body radiation?")

> Entering new MultiPromptChain chain...
physics: {'input': 'What is black body radiation?'}
> Finished chain.

"Black body radiation refers to the electromagnetic radiation emitted by a perfect black body, which is an idealized physical body that absorbs all incident electromagnetic radiation. The radiation emitted by a black body depends only on its temperature and follows a specific distribution known as Planck's law. This radiation is characterized by a continuous spectrum of wavelengths and intensities, with the peak intensity shifting to shorter wavelengths as the temperature of the black body increases. Black body radiation plays a key role in understanding concepts such as thermal radiation and the quantization of energy in quantum mechanics."

chain.run("what is 2 + 2")

> Entering new MultiPromptChain chain...
math: {'input': 'what is 2 + 2'}
> Finished chain.

'The answer to 2 + 2 is 4.'

chain.run("Why does every cell in our body contain DNA?")

> Entering new MultiPromptChain chain...
biology: {'input': 'Why does every cell in our body contain DNA?'}

---------------------------------------------------------------------------
ValueError                                Traceback (most recent call last)
Cell In[75], line 1
----> 1 chain.run("Why does every cell in our body contain DNA?")

(...)

File ~/.pyenv/versions/3.10.10/lib/python3.10/site-packages/langchain/chains/router/base.py:106, in MultiRouteChain._call(self, inputs, run_manager)
    104     return self.default_chain(route.next_inputs, callbacks=callbacks)
    105 else:
--> 106     raise ValueError(
    107         f"Received invalid destination chain name '{route.destination}'"
    108     )

ValueError: Received invalid destination chain name 'biology'

The router model answered with the destination "biology", which is not one of the four candidate names, so MultiPromptChain raised a ValueError instead of falling back to the default chain. With these settings, an unknown name is not treated the same way as "DEFAULT".
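One way to avoid this failure is to treat any destination outside the candidate list like "DEFAULT" before dispatching. The sketch below parses a router reply wrapped in a json code fence, as the router prompt requests, and maps both "DEFAULT" and unknown names to None (that is, the default chain). `parse_route` is a hypothetical helper, not LangChain's RouterOutputParser. Wiring it into MultiPromptChain would need a custom output parser, which is not shown. Two simpler alternatives are to add a biology entry to prompt_infos, or to use the silent_errors option that some LangChain versions appear to offer on MultiRouteChain for sending invalid names to the default chain. Check that this option exists in your installed version before relying on it.

```python
import json
import re
from typing import Iterable, Optional, Tuple

FENCE = "`" * 3  # a markdown code fence, built here to keep the example readable


def parse_route(text: str, valid_names: Iterable[str]) -> Tuple[Optional[str], str]:
    """Parse the router's JSON reply; map DEFAULT or unknown destinations to None."""
    match = re.search(FENCE + r"(?:json)?\s*(.*?)" + FENCE, text, re.DOTALL)
    payload = json.loads(match.group(1) if match else text)  # fall back to bare JSON
    destination = payload["destination"].strip()
    next_input = payload["next_inputs"]
    if destination.upper() == "DEFAULT" or destination not in set(valid_names):
        destination = None  # use the default chain instead of raising
    return destination, next_input


names = ["physics", "math", "History", "computer science"]
reply = (FENCE + 'json\n{"destination": "biology", '
         '"next_inputs": "Why does every cell in our body contain DNA?"}\n' + FENCE)
print(parse_route(reply, names))
# (None, 'Why does every cell in our body contain DNA?')
```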